I used a Google Pixel 2 as my primary phone for about three years. To say that the photos it took were impressive would be an understatement. Sometimes they were downright incredible.

So a couple of days back, when I came across a recently published patent application from Google about the Pixel’s camera, I could not help but read it in fine detail. It goes without saying that the tech behind it is genius; otherwise, why would I be writing an article about it? Let’s dive in.

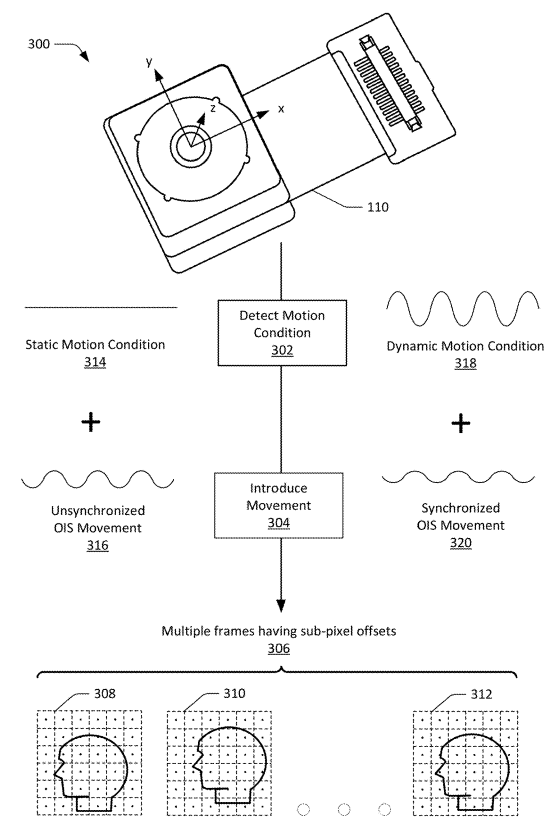

The underlying idea described in this patent is an unusual combination of two technologies: optical image stabilisation (OIS) and super-resolution. Let’s take a quick look at each of them and then see why I said the idea is unusual.

OIS was originally developed to counter the inadvertent movements of your hands while taking a picture, which often lead to blurry photos. Essentially, OIS gives the camera assembly some “leeway” to move independently of the rest of the smartphone: when the phone shakes, the stabiliser shifts the lens (or the sensor) in the opposite direction so that the image stays put. This independent movement is, of course, limited, but it only needs to last for a split second: the time it takes for the camera to capture a picture.
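To make the “leeway” idea concrete, here is a minimal one-axis sketch of an idealised OIS loop. It assumes the hand shake has already been converted from motion-sensor readings into an image displacement in pixels, and that the actuator's limited travel can be expressed as the largest shift it can undo; `stabilise` and its parameters are hypothetical names for illustration, not any real camera API.

```python
from typing import List, Tuple


def stabilise(shake_px: List[float], max_travel_px: float) -> Tuple[List[float], List[float]]:
    """Simulate a simple OIS loop along one axis (hypothetical model).

    shake_px: image displacement caused by hand shake at each moment, in pixels
              (assumed already derived from the phone's motion sensors).
    max_travel_px: the actuator's travel limit, expressed as the largest image
                   shift it can undo.
    Returns (corrections, residuals): the lens shift applied at each moment and
    the image motion left over after the correction.
    """
    corrections: List[float] = []
    residuals: List[float] = []
    for s in shake_px:
        # Move opposite to the shake, but never past the actuator's limit.
        c = max(-max_travel_px, min(max_travel_px, -s))
        corrections.append(c)
        residuals.append(s + c)
    return corrections, residuals


# Small shakes are fully cancelled; the 4 px jolt exceeds the 3 px travel,
# so 1 px of motion leaks through.
corrections, residuals = stabilise([0.0, 1.5, -2.0, 4.0], max_travel_px=3.0)
print(residuals)  # [0.0, 0.0, 0.0, 1.0]
```

The clamp is what “limited leeway” means in practice: anything within the actuator's range is cancelled, and anything beyond it shows up as blur.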

Coming to super-resolution: it is a software technique. Basically, it is a way of merging multiple photos of the same scene into a single, clearer, higher-resolution image. For this to work, the photos need to be taken from slightly different angles or positions, so that each one samples the scene at a slightly different sub-pixel offset and captures detail the others miss. Such pictures can be obtained, for example, by capturing a burst of frames while you press the shutter button. Your hand moves the phone a little during this time anyway, so the frames are captured from slightly different angles and positions. Watch this video to learn more about Google’s approach to super-resolution:
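Why do slightly shifted frames help? The classic “shift-and-add” idea shows it: each low-resolution frame samples a different subset of points on a finer grid, so placing the frames back at their offsets fills that grid in. This is a minimal sketch of that textbook technique, not Google's algorithm. It assumes the offsets are known and land exactly on a grid twice as fine as the sensor; in a real burst the offsets are random and fractional and must first be estimated by aligning the frames. `capture` and `shift_and_add` are hypothetical helper names.

```python
from typing import List, Tuple

Image = List[List[float]]


def capture(scene: Image, dy: int, dx: int, factor: int) -> Image:
    """Simulate one low-resolution frame: keep every `factor`-th pixel of the
    high-resolution scene, starting from offset (dy, dx) on the fine grid."""
    return [row[dx::factor] for row in scene[dy::factor]]


def shift_and_add(frames: List[Tuple[int, int, Image]], factor: int,
                  height: int, width: int) -> Image:
    """Place every pixel of every frame at its position on the fine grid,
    using the frame's known offset, and average wherever frames overlap.
    Grid cells that no frame covered are left at 0.0."""
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for dy, dx, frame in frames:
        for i, row in enumerate(frame):
            for j, value in enumerate(row):
                y, x = dy + i * factor, dx + j * factor
                total[y][x] += value
                count[y][x] += 1
    return [[total[y][x] / count[y][x] if count[y][x] else 0.0
             for x in range(width)] for y in range(height)]


# A 4x4 "scene" seen by a sensor with half the resolution in each direction.
scene = [[float(4 * y + x) for x in range(4)] for y in range(4)]
offsets = [(0, 0), (0, 1), (1, 0), (1, 1)]  # four slightly shifted frames
frames = [(dy, dx, capture(scene, dy, dx, 2)) for dy, dx in offsets]

print(shift_and_add(frames, 2, 4, 4) == scene)      # True: full detail recovered
print(shift_and_add(frames[:1], 2, 4, 4) == scene)  # False: one frame isn't enough
```

A single frame only fills a quarter of the fine grid; it is the differences in position between frames that supply the missing samples.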

Now, it may seem counter-intuitive to combine these two techniques, because OIS works to cancel out the camera’s movement, whereas super-resolution depends on it.

Yet Google has combined these technologies and turned the combination to its advantage. It does so through a complex interplay between the movement caused by your hand and the movement created by the stabiliser system.

Sensing your hand’s movement does not require any new hardware. Your phone already has sophisticated motion sensors, which are used to detect events like you picking up your phone or turning it sideways to watch a video. The same sensors also count your steps in health apps.

The motion data gathered from these sensors is sent to the OIS system, which would ordinarily counter these movements as much as it can. In this case, however, instead of cancelling the movements outright, the OIS system coordinates the movement of the camera assembly so that jitter is cancelled but the frames for the super-resolution algorithm are still captured from sufficiently different angles and positions.
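The patent’s exact control scheme isn’t described here, so the following is only a hypothetical sketch of the general idea along one axis. Plain OIS drives each frame’s image offset to zero; this version drives it to a deliberately chosen sub-pixel target instead, so the random shake is cancelled but the frames still land at distinct, useful positions. `plan_frames`, `targets_px`, and the one-shake-value-per-frame simplification are all assumptions for illustration.

```python
from typing import List, Tuple


def plan_frames(shake_px: List[float], targets_px: List[float],
                max_travel_px: float) -> List[Tuple[float, float]]:
    """Hypothetical control rule along one axis.

    For frame k, instead of driving the image offset to 0 (plain OIS), drive it
    to targets_px[k], a deliberately chosen sub-pixel offset. The lens shift is
    clamped to the actuator's travel, so a target may be missed if the shake is
    too large. For brevity, each frame uses a single shake value rather than
    tracking the shake throughout the exposure.
    Returns a (lens_shift, resulting_image_offset) pair per frame.
    """
    plan = []
    for s, t in zip(shake_px, targets_px):
        shift = max(-max_travel_px, min(max_travel_px, t - s))
        plan.append((shift, s + shift))
    return plan


# Random-looking shake during a 4-frame burst, and the offsets we want instead:
# alternating between 0 and half a pixel so the frames interleave.
shake = [0.25, -0.75, 1.125, 0.5]
targets = [0.0, 0.5, 0.0, 0.5]
for shift, offset in plan_frames(shake, targets, max_travel_px=3.0):
    print(f"lens shift {shift:+.3f} px -> image offset {offset:.3f} px")
```

The design choice worth noting is that the same actuator does both jobs: the term `-s` removes the jitter, and the term `t` adds back a controlled offset that the merging step can exploit.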

Once these images are captured, they are merged into a single higher-resolution image using AI techniques, which extract information from each frame to produce one bigger and clearer photo.
